
Amid the festivities at its fall 2022 GTC conference, Nvidia took the wraps off new robotics-related hardware and services aimed at companies developing and testing machines across industries like manufacturing. Isaac Sim, Nvidia’s robotics simulation platform, will soon be available in the cloud, the company said. And Nvidia’s Jetson lineup of system-on-modules is expanding with Jetson Orin Nano, a system designed for low-powered robots.

Isaac Sim, which launched in open beta last June, allows designers to simulate robots interacting with mockups of the real world (think digital re-creations of warehouses and factory floors). Users can generate data sets from simulated sensors to train the models on real-world robots, leveraging synthetic data from batches of parallel, unique simulations to improve the model’s performance.

It’s not just marketing bluster, necessarily. Some research suggests that synthetic data has the potential to address many of the development challenges plaguing companies attempting to operationalize AI. MIT researchers recently found a way to classify images using synthetic data, and nearly every major autonomous vehicle company uses simulation data to supplement the real-world data they collect from cars on the road.

Nvidia says that the upcoming release of Isaac Sim (which is available on AWS RoboMaker and Nvidia NGC, from which it can be deployed to any public cloud, and soon on Nvidia’s Omniverse Cloud platform) will include the company’s real-time fleet task assignment and route-planning engine, Nvidia cuOpt, for optimizing robot path planning.

“With Isaac Sim in the cloud … teams can be located across the globe while sharing a virtual world in which to simulate and train robots,” Nvidia senior product marketing manager Gerard Andrews wrote in a blog post. “Running Isaac Sim in the cloud means that developers will not be tied to a powerful workstation to run simulations. Any machine will be able to set up, manage and review the results of simulations.”

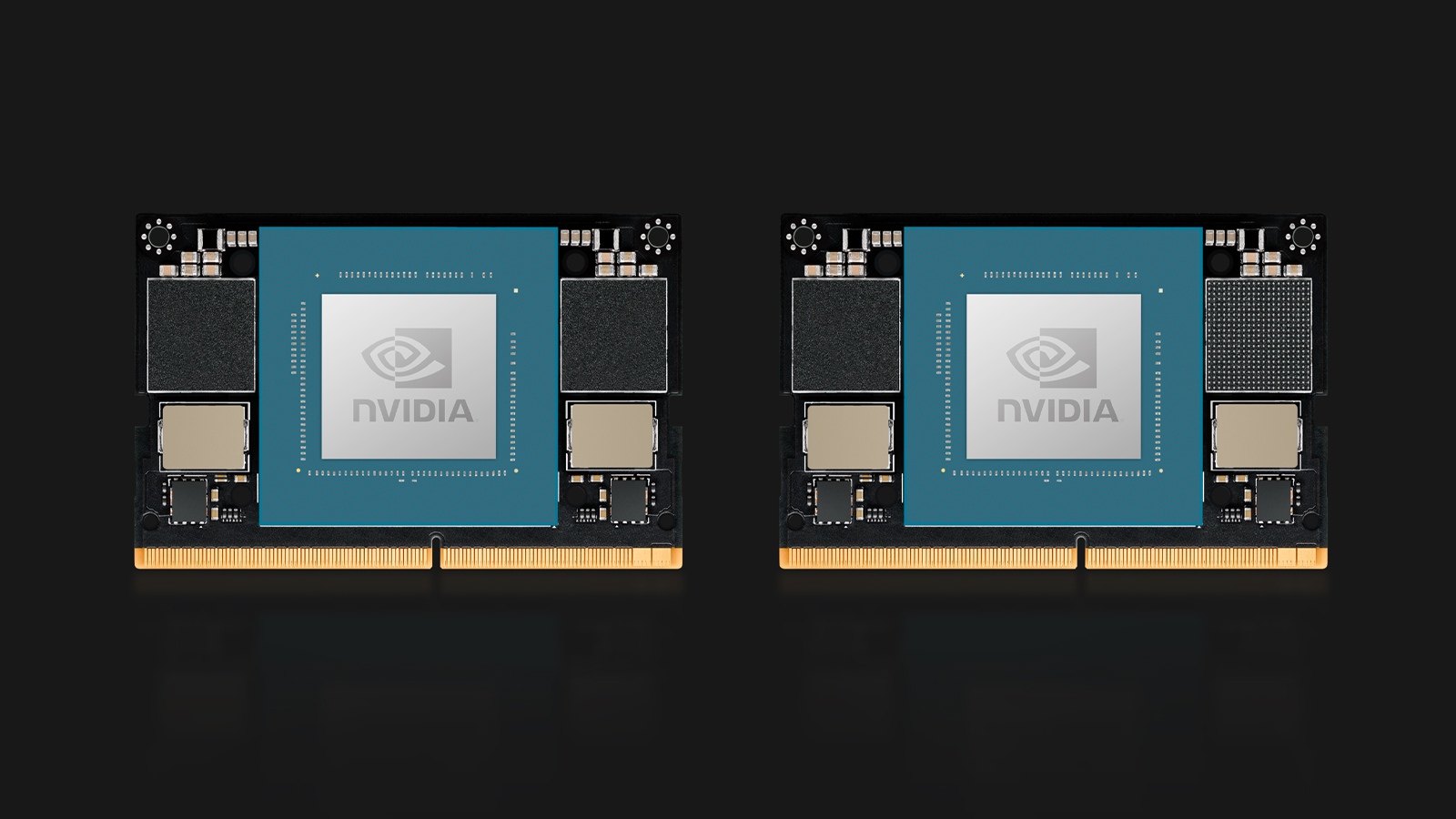

Jetson Orin Nano

Back in March, Nvidia introduced Jetson Orin, the next generation of the company’s Arm-based, single-board computers for edge computing use cases. The first in the line was the Jetson AGX Orin, and Orin Nano expands the portfolio with more affordable configurations.

Image Credits: Nvidia

The aforementioned Orin Nano delivers up to 40 trillion operations per second (TOPS), the number of computing operations the chip can handle at 100% utilization, in the smallest Jetson form factor to date. It sits on the entry-level side of the Jetson family, which now includes six Orin-based production modules intended for a range of robotics and local, offline computing applications.

Coming in modules compatible with Nvidia’s previously announced Orin NX, the Orin Nano supports AI application pipelines with an Ampere architecture GPU (Ampere being the GPU architecture that Nvidia launched in 2020). Two versions will be available in January starting at $199: the Orin Nano 8GB, which delivers up to 40 TOPS with power configurable from 7W to 15W, and the Orin Nano 4GB, which reaches up to 20 TOPS with power options as low as 5W to 10W.

“Over 1,000 customers and 150 partners have embraced Jetson AGX Orin since Nvidia announced its availability just six months ago, and Orin Nano will significantly expand this adoption,” Nvidia VP of embedded and edge computing Deepu Talla said in a statement. (By comparison to the Orin Nano, the Jetson AGX Orin costs well over a thousand dollars, a substantial delta, needless to say.) “With an orders-of-magnitude increase in performance for millions of edge AI and [robotics] developers, Jetson Orin Nano sets a new standard for entry-level edge AI and robotics.”
