
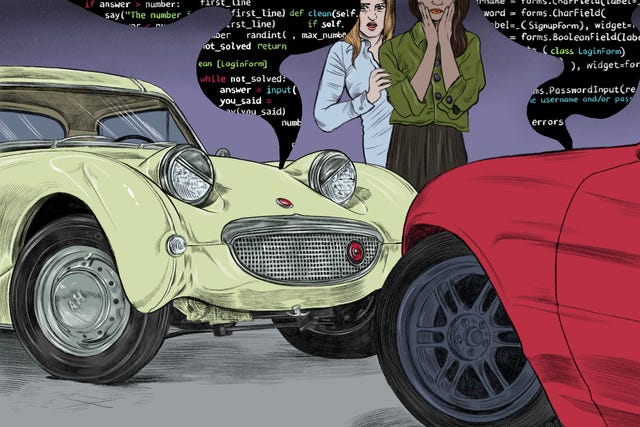

Illustration by Dilek Baykara, Car and Driver

From the September 2022 issue of Car and Driver.

Early in June, Blake Lemoine, an engineer at Google working on artificial intelligence, made headlines for claiming that the company’s Language Model for Dialogue Applications (LaMDA) chat program is self-aware. Lemoine shared transcripts of his conversation with LaMDA that he says prove it has a soul and should be treated as a co-worker rather than a tool. Fellow engineers were unconvinced, as am I. I read the transcripts; the AI talks like an annoying stoner at a college party, and I’m positive those guys lacked any self-awareness. All the same, Lemoine’s interpretation is understandable. If something is talking about its hopes and dreams, then to say it doesn’t have any seems heartless.

At the moment, our cars don’t care whether you’re nice to them. Even if it feels wrong to leave them dirty, allow them to get door dings, or run them on 87 octane, no emotional toll is taken. You may pay more to a mechanic, but not to a therapist. The alerts from Honda Sensing and Hyundai/Kia products about the car ahead beginning to move and the commands from the navigation system in a Mercedes as you miss three turns in a row aren’t signs that the vehicle is getting huffy. Any sense that there’s an increased urgency to the flashing warnings or a change of tone is pure imagination on the driver’s part. Ascribing emotions to our cars is easy, with their quadruped-like proportions, steady companionship, and eager-eyed faces. But they don’t have feelings, not even the cute ones like Austin-Healey Sprites.

How to Motor with Manners

What will happen when they do? Will a car that’s low on fuel claim it’s too hungry to go on, even when you’re late for class and there’s enough to get there on fumes? What happens if your car falls in love with the neighbor’s BMW or, worse, starts a feud with the other neighbor’s Ford? Can you end up with a scaredy-car, one that won’t go into bad areas or out in the wilderness after dark? If so, can you force it to go? Can one be cruel to a car?

“You’re taking it all the way to the end,” says Mois Navon, a technology-ethics lecturer at Ben-Gurion University of the Negev in Beersheba, Israel. Navon points out that attempts at creating consciousness in AI are decades deep, and despite Lemoine’s thoughts and my flights of fancy, we’re nowhere near computers with real feelings. “A car doesn’t demand our mercy if it can’t feel pain and pleasure,” he says. Ethically, then, we needn’t worry about a car’s feelings, but Navon says our behavior toward anthropomorphic objects can be reflected later in our behavior toward living creatures. “A friend of mine just bought an Alexa,” he says. “He asked me if he should say ‘please’ to it. I said, ‘Yeah, because it’s about you, not the machine, the practice of asking like a decent person.’”

Paul Leonardi disagrees, not with the idea of behaving like a decent person, but with the idea of conversing with our vehicles as if they were sentient. Leonardi is co-author of The Digital Mindset, a guide to understanding AI’s role in business and tech. He believes that treating a machine like a person creates unrealistic expectations of what it can do. Leonardi worries that if we talk to a car like it’s K.I.T.T. from Knight Rider, then we’ll expect it to be able to solve problems the way K.I.T.T. did for Michael. “Currently, the AI is not sophisticated enough that you could say ‘What do I do?’ and it could suggest activating the turbo boost,” Leonardi says.

Understanding my need to have everything reduced to TV from the ’80s, he suggests that instead we practice speaking to our AI like Picard from Star Trek, with “clear, explicit instructions.” Got it. “Audi, tea, Earl Grey, hot.” And just in case Lemoine is right: “Please.”
