
As interest grows in large AI models, particularly large language models (LLMs) like OpenAI's GPT-3, Nvidia is looking to cash in with new fully managed, cloud-powered services geared toward enterprise software developers. Today at the company's fall 2022 GTC conference, Nvidia announced the NeMo LLM Service and BioNeMo LLM Service, which ostensibly make it easier to adapt LLMs and deploy AI-powered apps for a range of use cases including text generation and summarization, protein structure prediction and more.

The new offerings are part of Nvidia's NeMo, an open source toolkit for conversational AI, and they're designed to minimize, or even eliminate, the need for developers to build LLMs from scratch. LLMs are frequently expensive to develop and train, with one recent model, Google's PaLM, costing an estimated $9 million to $23 million using publicly available cloud computing resources.

Using the NeMo LLM Service, developers can create models ranging in size from 3 billion to 530 billion parameters with custom data in minutes to hours, Nvidia claims. (Parameters are the parts of the model learned from historical training data; in other words, the variables that inform the model's predictions, like the text it generates.) Models can be customized using a technique called prompt learning, which Nvidia says allows developers to tailor models trained with billions of data points for particular, industry-specific applications (e.g., a customer service chatbot) using a few hundred examples.
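To illustrate the general idea (this is not Nvidia's implementation), one widely used form of prompt learning, soft prompt tuning, keeps the pretrained model frozen and trains only a few "virtual token" vectors that are prepended to every input. The toy Python sketch below assumes that technique. The frozen "model" (an average of embeddings scored by a fixed weight vector), its weights, the token names and the `train_soft_prompt` helper are all hypothetical stand-ins, not NeMo code.

```python
# Hypothetical toy model: frozen token embeddings and a frozen scoring vector.
# Real LLMs have billions of frozen parameters; the principle is the same.
DIM = 4
FROZEN_W = [0.5, -0.25, 0.75, 0.1]
FROZEN_EMBED = {
    "refund": [0.2, 0.1, -0.3, 0.4],
    "order": [0.0, 0.5, 0.1, -0.2],
    "late": [-0.4, 0.3, 0.2, 0.1],
    "thanks": [0.3, -0.1, 0.0, 0.5],
}


def forward(prompt, tokens):
    """Average the soft-prompt vectors and token embeddings, then score."""
    vectors = prompt + [FROZEN_EMBED[t] for t in tokens]
    pooled = [sum(v[i] for v in vectors) / len(vectors) for i in range(DIM)]
    return sum(w * x for w, x in zip(FROZEN_W, pooled))


def train_soft_prompt(examples, n_virtual=2, lr=0.5, steps=200):
    """Gradient descent on squared error that updates ONLY the soft prompt.

    FROZEN_W and FROZEN_EMBED are read but never modified.
    """
    prompt = [[0.0] * DIM for _ in range(n_virtual)]
    for _ in range(steps):
        for tokens, target in examples:
            n = n_virtual + len(tokens)
            err = forward(prompt, tokens) - target
            # For this averaging model, d(err^2)/d(prompt[j][i]) = 2 * err * W[i] / n.
            for vec in prompt:
                for i in range(DIM):
                    vec[i] -= lr * 2 * err * FROZEN_W[i] / n
    return prompt


if __name__ == "__main__":
    examples = [(["refund", "order"], 1.0)]
    prompt = train_soft_prompt(examples)
    print(round(forward(prompt, ["refund", "order"]), 3))  # prints 1.0
```

Here only `n_virtual * DIM` numbers (8 in this toy) are trained while the frozen weights stay untouched, which is the main appeal of the approach: a small set of examples only has to fit a small set of parameters.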

Developers can customize models for multiple use cases in a no-code "playground" environment, which also offers features for experimentation. Once ready to deploy, the tuned models can run on cloud instances, on-premises systems or through an API.

The BioNeMo LLM Service is similar to the LLM Service, but with tweaks for life sciences customers. Part of Nvidia's Clara Discovery platform and soon available in early access on Nvidia GPU Cloud, it includes two language models for chemistry and biology applications as well as support for protein, DNA and chemistry data, Nvidia says.

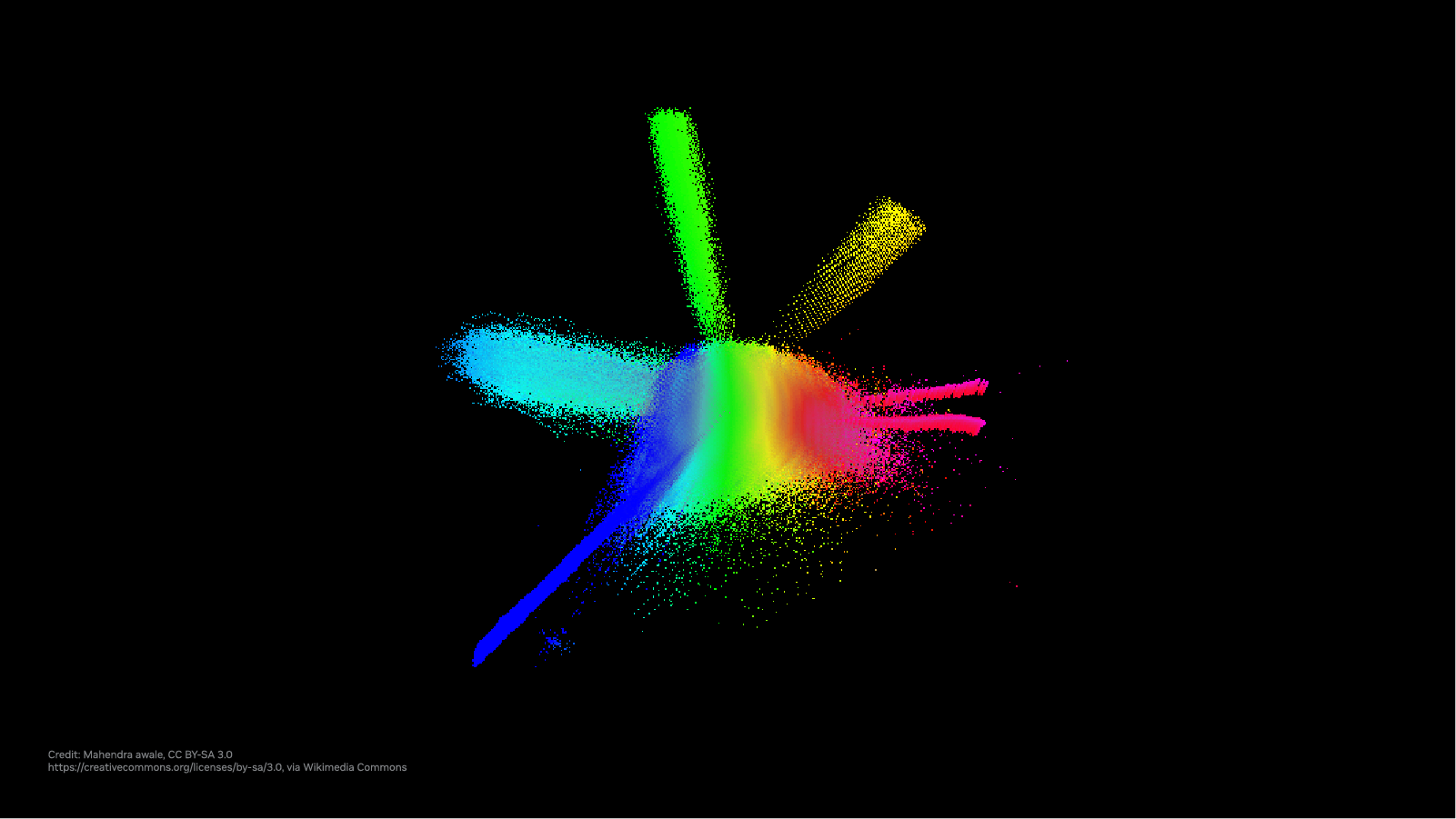

Visualization of bio processes predicted by AI models.

BioNeMo LLM will include four pretrained language models to start, including a model from Meta's AI R&D division, Meta AI Labs, that processes amino acid sequences to generate representations that can be used to predict protein properties and functions. Nvidia says that in the future, researchers using the BioNeMo LLM Service will be able to customize the LLMs for higher accuracy.

Recent research has shown that LLMs are remarkably good at predicting certain biological processes. That's because structures like proteins can be modeled as a sort of language, one in which words from a dictionary (amino acids) are strung together to form a sentence (a protein). For example, Salesforce's R&D division several years ago created an LLM called ProGen that can generate structurally and functionally viable protein sequences.
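The "protein as language" analogy can be made concrete with a small Python sketch. It assumes a vocabulary made of just the 20 standard amino acids (one-letter codes) and turns a sequence into token IDs plus next-token prediction pairs, the basic training signal of a language model. The helper names are hypothetical, and production protein models typically add special and non-standard tokens that this simplified vocabulary leaves out.

```python
# The "dictionary": the 20 standard amino acids, as one-letter codes.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
VOCAB = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def tokenize(sequence):
    """Map a protein sequence (the 'sentence') to integer token IDs."""
    unknown = set(sequence) - set(VOCAB)
    if unknown:
        raise ValueError(f"non-standard residues: {sorted(unknown)}")
    return [VOCAB[aa] for aa in sequence]


def next_token_pairs(ids):
    """Build (context, next token) pairs, as a language model is trained on."""
    return [(ids[:i], ids[i]) for i in range(1, len(ids))]


if __name__ == "__main__":
    ids = tokenize("MKT")
    print(ids)                    # prints [10, 8, 16]
    print(next_token_pairs(ids))  # prints [([10], 8), ([10, 8], 16)]
```

A model trained to predict each next residue from the ones before it is, in principle, the same kind of model that predicts the next word in a sentence; only the vocabulary changes.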

Both the BioNeMo LLM Service and LLM Service include the option to use ready-made and custom models through a cloud API. Use of the services also gives customers access to the NeMo Megatron framework, now in open beta, which allows developers to build a range of multilingual LLMs, including GPT-3-type language models.

Nvidia says that automotive, computing, education, healthcare and telecommunications brands are currently using NeMo Megatron to launch AI-powered services in Chinese, English, Korean and Swedish.

The NeMo LLM and BioNeMo services and cloud APIs are expected to be available in early access starting next month. As for the NeMo Megatron framework, developers can try it for free via Nvidia's LaunchPad piloting platform.
