YouTube’s ‘dislike’ and ‘not interested’ options don’t do much to your recommendations, study says • TechCrunch


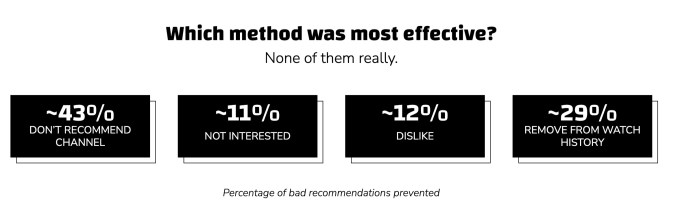

It’s no secret that both viewers and creators are confused by the puzzle that is YouTube’s recommendation algorithm. Now, a new study by Mozilla suggests that users’ recommendations don’t change much when they use options like ‘dislike’ and ‘not interested’ to stop YouTube from suggesting similar videos.

The organization’s study found that YouTube kept serving participants videos similar to ones they had rejected, even when they used feedback tools or changed their settings. Among the tools meant to prevent bad recommendations, clicking ‘not interested’ and ‘dislike’ was mostly ineffective, preventing only 11% and 12% of bad recommendations, respectively. Options like ‘don’t recommend channel’ and ‘remove from history’ ranked higher in effectiveness, cutting 43% and 29% of bad recommendations, respectively. Overall, users who participated in the study were dissatisfied with YouTube’s ability to keep bad recommendations out of their feeds.

Mozilla’s study drew on data from 22,722 users of its own RegretReporter browser extension, which lets users report ‘regrettable’ videos and better control their recommendations, and analyzed more than 567 million videos. It also conducted a detailed survey of 2,757 RegretReporter users to better understand their feedback.

The report noted that 78.3% of participants used YouTube’s own feedback buttons, changed their settings, or avoided certain videos to ‘teach’ the algorithm to suggest better content. Of the people who took any steps to better control YouTube’s recommendations, 39.3% said those steps didn’t work.

“Nothing changed. Sometimes I would report things as misleading and spam and the next day it was back in. It almost feels like the more negative feedback I provide to their suggestions the higher bullshit mountain gets. Even when you block certain sources they eventually return,” one survey respondent said.

Another 23% of people who tried to change YouTube’s recommendations gave a mixed response. They cited problems such as unwanted videos creeping back into their feeds, or having to spend a lot of sustained time and effort to improve their recommendations.

“Yes they did change, but in a bad way. In a way, I feel punished for proactively trying to change the algorithm’s behavior. In some ways, less interaction provides less data on which to base the recommendations,” another study participant said.

Mozilla concluded that even YouTube’s most effective tools for staving off bad recommendations were not enough to change users’ feeds. It said that the company “isn’t really that interested in hearing what its users really want, preferring to rely on opaque methods that drive engagement regardless of the best interests of its users.”

The organization recommended that YouTube design easy-to-understand user controls and give researchers granular data access so they can better understand the video-sharing site’s recommendation engine. We have asked YouTube to comment on the study and will update the story if we hear back.

Mozilla conducted another YouTube-based study last year, which found that the service’s algorithm recommended 71% of the videos users “regretted” watching, including clips containing misinformation and spam. A few months after that study was made public, YouTube published a blog post defending its decision to build the current recommendation system and filter out “low-quality” content.

After years of relying on algorithms to suggest more content to users, social networks including TikTok, Twitter, and Instagram are trying to give users more options to refine their feeds.

Lawmakers around the world are also taking a closer look at how the opaque recommendation engines of various social networks can affect users. The European Union passed the Digital Services Act in April to increase platforms’ algorithmic accountability, while the U.S. is considering a bipartisan Filter Bubble Transparency Act to address a similar issue.
